Piccolo AI is structured around a five-step workflow for constructing working edge IoT inference models tailored to your specific application’s training dataset. These steps are summarized below.

Acquire Input Sensor Dataset

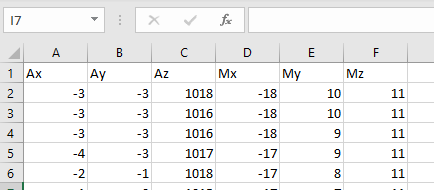

Piccolo AI creates sensor-driven pattern recognition models using labeled time-series training data you supply. This data is imported into the tool using either .CSV files or .WAV audio files, with labeling handled in the Data Manager tab.

Piccolo AI can import any time-series data where the individual sensor channels are separated by commas and each successive sample in time is a separate row. The .CSV file should include a header row with the sensor channel names as shown below.

To make dataset creation and management more productive, SensiML also offers Data Studio, a paid ML DataOps tool that complements Piccolo AI with features such as streaming data capture directly from your embedded device, video annotation, multi-user collaboration, and automated labeling, among others.

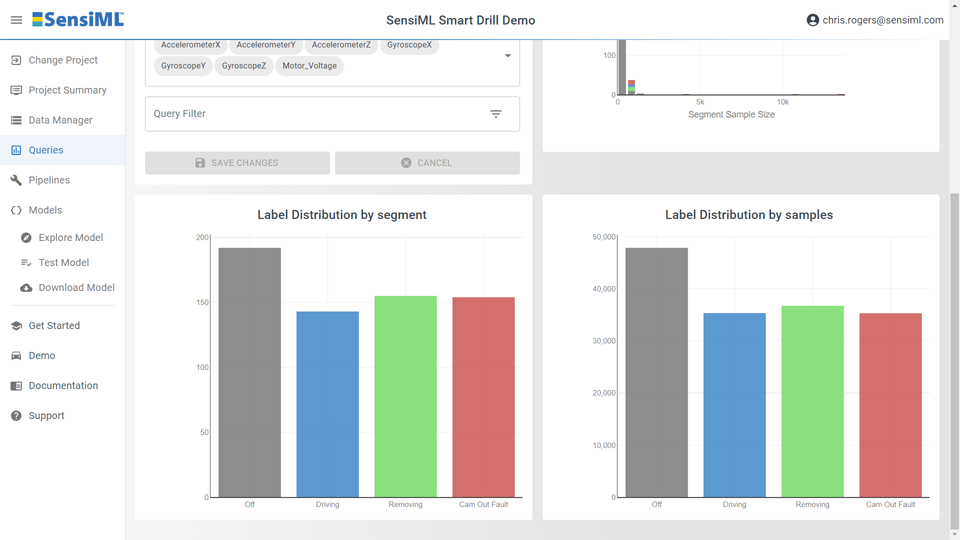
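The .CSV layout described above has one column per sensor channel, a header row of channel names, and one row per time sample. The following Python sketch writes and reads such a file using only the standard `csv` module. The channel names are hypothetical placeholders rather than names Piccolo AI requires, and the reader assumes integer sample values (such as raw ADC counts).

```python
import csv
import io

# Hypothetical channel names for a 3-axis accelerometer; substitute your own.
CHANNELS = ["AccelerometerX", "AccelerometerY", "AccelerometerZ"]

def write_capture(rows, channels=CHANNELS):
    """Serialize samples to CSV text: header row first, then one row per time step."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(channels)          # header row with sensor channel names
    for sample in rows:
        if len(sample) != len(channels):
            raise ValueError("each sample needs exactly one value per channel")
        writer.writerow(sample)        # one comma-separated row per sample
    return buf.getvalue()

def read_capture(text):
    """Parse CSV text into a dict of channel name -> list of integer samples."""
    reader = csv.DictReader(io.StringIO(text))
    columns = {name: [] for name in reader.fieldnames}
    for row in reader:
        for name, value in row.items():
            columns[name].append(int(value))
    return columns

if __name__ == "__main__":
    text = write_capture([(12, -3, 1020), (15, -1, 1018), (11, -4, 1022)])
    print(text)
    print(read_capture(text)["AccelerometerZ"])  # [1020, 1018, 1022]
```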

Define Dataset Query

Once you have a labeled dataset, use Piccolo AI’s Query feature to define the subset of available data, sensors, and metadata used to train the model. Queries provide the flexibility to create multiple model variants based on specific factors in the overall dataset and to partition the dataset into training and test data.
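Piccolo AI handles this partitioning for you; the sketch here only illustrates one common policy and is not how Piccolo AI implements it. The hypothetical Python functions assign whole capture files, rather than individual windows, to the training or test set, so that closely correlated samples from one recording never end up in both. Hashing the file name keeps each assignment stable across runs.

```python
import hashlib

def assign_split(capture_name, test_fraction=0.2):
    """Deterministically assign a whole capture file to 'train' or 'test'."""
    digest = hashlib.sha256(capture_name.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32   # value in [0, 1)
    return "test" if bucket < test_fraction else "train"

def partition(capture_names, test_fraction=0.2):
    """Group capture names into train/test lists using assign_split."""
    splits = {"train": [], "test": []}
    for name in capture_names:
        splits[assign_split(name, test_fraction)].append(name)
    return splits

if __name__ == "__main__":
    names = [f"capture_{i:03d}.csv" for i in range(10)]
    print(partition(names))
```

The split is approximate: with few files, the actual test fraction can differ noticeably from `test_fraction`.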

Piccolo AI lets you create user-specified metadata alongside your sensor data and labels. You can then use this metadata to define subsets of the overall dataset from which to create model variants.

For example, a smart tennis racket product could use separate models for right-handed and left-handed users. Generating such variants is simply a matter of defining metadata-driven query filters and rerunning the pipeline to produce a tailored model for each subpopulation.
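The tennis racket example can be sketched as a plain metadata filter. In this hypothetical Python sketch, each capture carries a label and a metadata dictionary, and `query` keeps captures whose metadata matches every filter. The `Capture` type, the `query` function, and the `Handedness` field are illustrative assumptions, not Piccolo AI's query API.

```python
from dataclasses import dataclass, field

@dataclass
class Capture:
    """One recorded session: file name, label, and user-defined metadata."""
    name: str
    label: str
    metadata: dict = field(default_factory=dict)

def query(captures, labels=None, **metadata_filters):
    """Keep captures whose label is in `labels` (if given) and whose metadata
    matches every key=value filter."""
    selected = []
    for cap in captures:
        if labels is not None and cap.label not in labels:
            continue
        if all(cap.metadata.get(k) == v for k, v in metadata_filters.items()):
            selected.append(cap)
    return selected

captures = [
    Capture("s01.csv", "Forehand", {"Handedness": "Right"}),
    Capture("s02.csv", "Backhand", {"Handedness": "Left"}),
    Capture("s03.csv", "Serve", {"Handedness": "Right"}),
    Capture("s04.csv", "Forehand", {"Handedness": "Left"}),
]

# One query per model variant; the same pipeline is then rerun on each subset.
right_handed = query(captures, Handedness="Right")
left_handed = query(captures, Handedness="Left")
print([c.name for c in right_handed])  # ['s01.csv', 's03.csv']
print([c.name for c in left_handed])   # ['s02.csv', 's04.csv']
```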

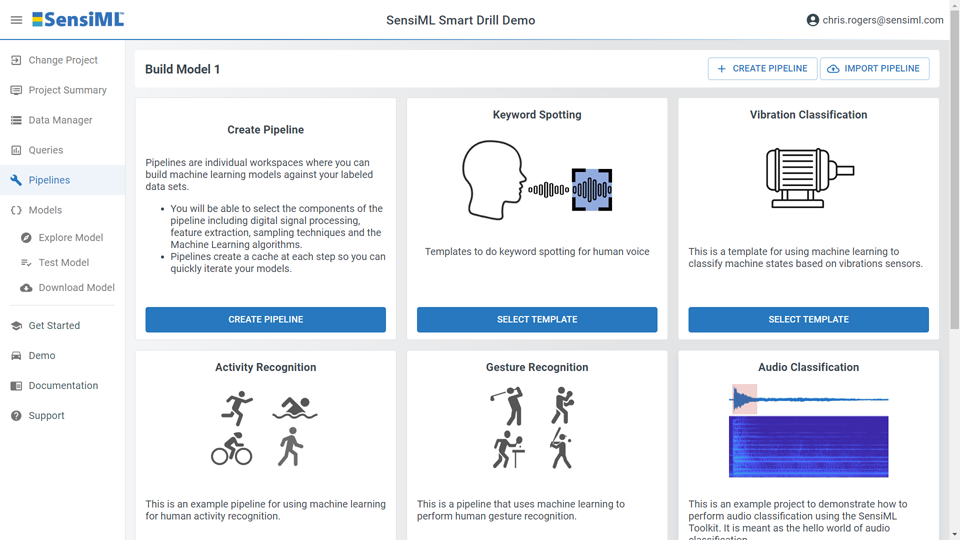

Construct Model Pipeline

Piccolo AI provides a powerful array of options for converting labeled sensor data into functioning ML models. The simplest approach is to use one of the predefined Pipeline Templates for common use cases. In these templates, the key pipeline parameters have already been selected and optimized, so all you need to do is run them against your dataset.

Piccolo AI supports the following pipeline templates by default:

- Human Voice Keyword Spotting

- Machinery Vibration Classification

- Human Activity Recognition

- IMU-based Gesture Recognition

- Audio Event Classification

SensiML continues to add new templates, and the open-source community can contribute additional ones as well.

For use cases not already covered by a pre-defined template, you can create your own pipeline from scratch and optionally create a new template based on your custom pipeline configuration.

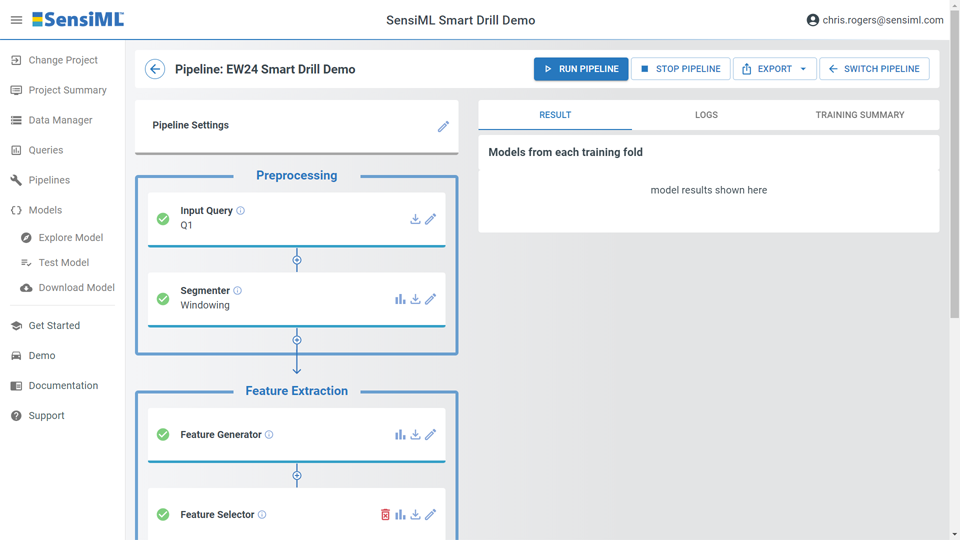

Every Piccolo AI model provides a comprehensive, customizable pipeline for processing raw sensor data into feature-transformed, classification, or regression output on the edge device.

The Piccolo AI model pipeline includes the following processing stages:

- Signal Pre-Processing (filtering, downsampling, averaging, scaling, normalization)

- Event Triggering (threshold, sliding-window, peak detect)

- Feature Transformation (over 80 supported transforms, or create your own)

- Classification (SVM, PME, Trees, NNs)

- Regression (linear regression)
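To show how these stages chain together, the following Python sketch runs a single sensor channel through scaling, sliding-window segmentation, a threshold trigger, three simple features, and a classifier. It is not Piccolo AI's implementation: every function name, parameter value, and centroid is hypothetical, nearest-centroid classification stands in for the SVM, PME, tree, and neural network classifiers listed above, and the regression stage is omitted.

```python
import math
import statistics

def scale(signal, factor):
    """Signal pre-processing: convert raw sensor counts to physical units."""
    return [x * factor for x in signal]

def sliding_windows(signal, size, step):
    """Segmentation: yield fixed-size windows that advance by `step` samples."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]

def triggered(window, threshold):
    """Event trigger: keep a window only if its peak magnitude exceeds `threshold`."""
    return max(abs(x) for x in window) > threshold

def features(window):
    """Feature transformation: mean, population standard deviation, peak-to-peak."""
    return [
        statistics.fmean(window),
        statistics.pstdev(window),
        max(window) - min(window),
    ]

def nearest_centroid(vector, centroids):
    """Classification: return the label whose centroid is closest (Euclidean)."""
    return min(centroids, key=lambda label: math.dist(vector, centroids[label]))

def run_pipeline(raw, centroids, factor=0.01, size=4, step=2, threshold=0.5):
    """Chain the stages: scale -> window -> trigger -> features -> classify."""
    labels = []
    for window in sliding_windows(scale(raw, factor), size, step):
        if triggered(window, threshold):
            labels.append(nearest_centroid(features(window), centroids))
    return labels

# Hypothetical centroids, as if learned from training data: [mean, std, peak-to-peak].
CENTROIDS = {"Tap": [0.0, 0.5, 1.0], "Shake": [0.0, 1.8, 3.8]}

if __name__ == "__main__":
    print(run_pipeline([0, 10, -10, 5, 200, -180, 190, -200, 0, 0], CENTROIDS))
    print(run_pipeline([60, -60, 60, -60], CENTROIDS))
```

Each stage is a separate function so that stages can be swapped or removed independently, which mirrors the add/remove-step model the pipeline UI describes.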

Pipelines can be built using either the GUI or the Python SDK.

The graphical UI displays the processing stages and lets you add or remove steps and configure the parameters of each stage in the pipeline. Hints describing the impact of each parameter offer guidance to novices and expert users alike.

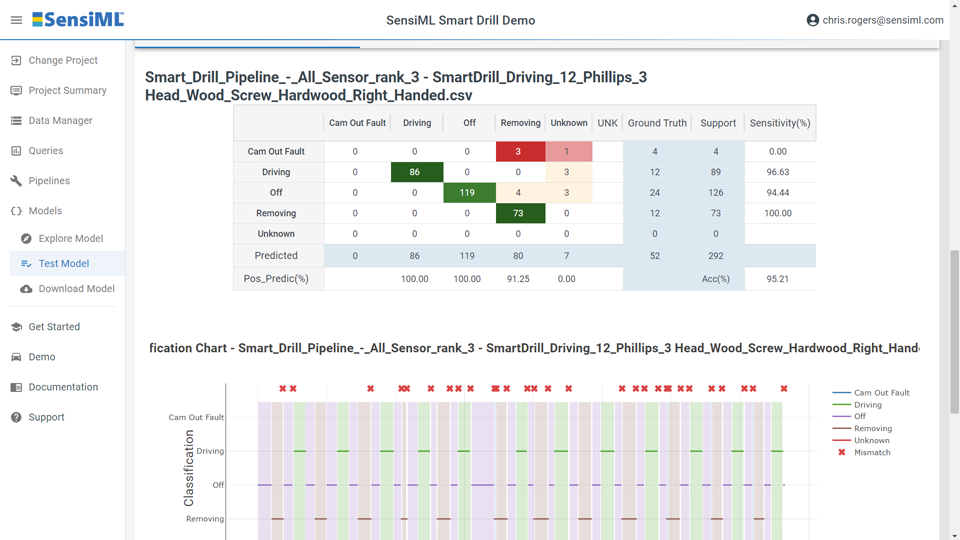

Test Model Performance

Once you have built a model, Piccolo AI makes it easy to assess its performance. You can visualize, analyze, and test the model with a variety of tools before ever needing to integrate firmware and test it empirically on the edge device itself. This saves valuable time and gives quick insight into model behavior and into areas that need improvement.

Piccolo AI provides the following model evaluation analyses:

- Confusion Matrix Table

- 2D Feature Plots

- UMAP, PCA, and t-SNE Analysis

- Feature Vector Distribution Plots

- Learning Curve Plots

- Pipeline Summary Diagrams
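To clarify what the confusion matrix table reports, the following Python sketch tallies actual against predicted labels and derives overall accuracy and per-class recall. The class labels and predictions are made-up illustration data, and the code is a generic computation rather than Piccolo AI's implementation.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def accuracy(matrix):
    """Correct predictions (the diagonal) divided by all predictions."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0

def per_class_recall(matrix, labels):
    """Fraction of each actual class that was predicted correctly."""
    return {
        label: (row[i] / sum(row) if sum(row) else 0.0)
        for i, (label, row) in enumerate(zip(labels, matrix))
    }

labels = ["Idle", "Walk", "Run"]
actual = ["Idle", "Idle", "Walk", "Walk", "Run", "Run", "Run", "Walk"]
predicted = ["Idle", "Walk", "Walk", "Walk", "Run", "Walk", "Run", "Walk"]

m = confusion_matrix(actual, predicted, labels)
print(m)            # [[1, 1, 0], [0, 3, 0], [0, 1, 2]]
print(accuracy(m))  # 0.75
```

Off-diagonal cells show which classes are confused with each other; here, "Run" is sometimes mistaken for "Walk".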

At this stage, you can quickly analyze the partitioned test data with automated testing features and import new data as desired to assess how well the model generalizes.

Generate Device Code

The final step in the Piccolo AI workflow is generating firmware code that is appropriately sized for your target device.

Piccolo AI is unique in its ability to support a broad array of MCUs, ML-optimized SoCs, and a variety of architectures. Choose from the list of supported platforms, then define your code generation parameters. Piccolo AI then generates a .ZIP archive containing an ML inference model customized for your chosen device, in binary, linkable library, or C source code format.

Piccolo AI code generation provides the following optional parameters:

- Format (binary, library, source code)

- Target Compiler Revision

- Processor Variant

- Floating Point Support (none, SW, HW)

- Hardware ML and SIMD Accelerator Use

- Debug Data Option
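The Floating Point Support option matters because many MCUs have no hardware FPU. A common way to avoid floating point entirely is fixed-point arithmetic such as Q15, in which values in [-1, 1) are stored as signed 16-bit integers. The document does not say which representation Piccolo AI's generated code uses, so treat the following Python sketch only as an illustration of the general technique. The function names are hypothetical.

```python
Q15_ONE = 1 << 15  # 32768: Q15 scale factor (value 1.0 is not representable)

def saturate(q):
    """Clamp to the signed 16-bit range [-32768, 32767]."""
    return max(-Q15_ONE, min(Q15_ONE - 1, q))

def to_q15(x):
    """Convert a float in [-1, 1) to a saturated Q15 integer."""
    return saturate(round(x * Q15_ONE))

def from_q15(q):
    """Convert a Q15 integer back to a float (for inspection only)."""
    return q / Q15_ONE

def q15_mul(a, b):
    """Integer-only multiply: Q15 x Q15 gives Q30, round, then shift back to Q15."""
    product = a * b                          # fits in 32 bits on the device
    return saturate((product + (1 << 14)) >> 15)

if __name__ == "__main__":
    print(from_q15(q15_mul(to_q15(0.5), to_q15(-0.25))))  # -0.125
```

Saturation prevents the one overflow case (-1.0 times -1.0) from wrapping around to a large negative value.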

The Code Generation step also provides code profiling estimates, including SRAM, stack, and flash memory requirements and the processing latency of model execution.
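Before reviewing these profiling figures, you can make a rough estimate yourself. The following Python sketch estimates weight storage (flash) and peak activation buffers (SRAM) for a small fully connected network. The [32, 16, 4] topology is hypothetical. The estimate ignores code size, feature-extraction buffers, stack, and any per-tensor scale factors that quantized weights need, so it is a lower bound and not a substitute for Piccolo AI's reported numbers.

```python
# Bytes per stored value for a few common numeric formats.
BYTES_PER_VALUE = {"float32": 4, "int16": 2, "int8": 1}

def dense_param_count(layer_sizes):
    """Weights plus biases for a fully connected network, e.g. [32, 16, 4]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def weight_bytes(layer_sizes, dtype="float32"):
    """Approximate flash needed to store all parameters."""
    return dense_param_count(layer_sizes) * BYTES_PER_VALUE[dtype]

def activation_bytes(layer_sizes, dtype="float32"):
    """Rough SRAM floor: largest input+output pair that must coexist in memory."""
    return max(a + b for a, b in zip(layer_sizes, layer_sizes[1:])) * BYTES_PER_VALUE[dtype]

if __name__ == "__main__":
    layers = [32, 16, 4]
    print(dense_param_count(layers))        # 596
    print(weight_bytes(layers, "float32"))  # 2384
    print(weight_bytes(layers, "int8"))     # 596
    print(activation_bytes(layers))         # 192
```

The same arithmetic shows why the Floating Point Support and data-type choices matter: moving from float32 to int8 weights cuts weight storage by roughly a factor of four.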